TechRadar

Identity fraud attacks using AI are fooling biometric security systems

- Deepfake selfies can now bypass traditional verification systems

- Fraudsters are exploiting AI for synthetic identity creation

- Organizations should adopt advanced behavior-based detection methods

The latest Global Identity Fraud Report by AU10TIX reveals a new wave of identity fraud, largely driven by the industrialization of AI-based attacks.

Based on millions of transactions analyzed from July through September 2024, the report shows how digital platforms across sectors, particularly social media, payments, and crypto, are facing unprecedented challenges.

Fraud tactics have evolved from simple document forgeries to sophisticated synthetic identities, deepfake images, and automated bots that can bypass conventional verification systems.

Social media platforms experienced a dramatic escalation in automated bot attacks in the lead-up to the 2024 US presidential election. According to the report, social media attacks accounted for 28% of all fraud attempts in Q3 2024, a notable jump from only 3% in Q1.

These attacks focus on spreading disinformation and manipulating public opinion on a large scale. AU10TIX says these bot-driven disinformation campaigns employ advanced Generative AI (GenAI) elements to avoid detection, an innovation that has enabled attackers to scale their operations while evading traditional verification systems.

The GenAI-powered attacks began escalating in March 2024, peaked in September, and are believed to have been aimed at influencing public perception by spreading false narratives and inflammatory content.

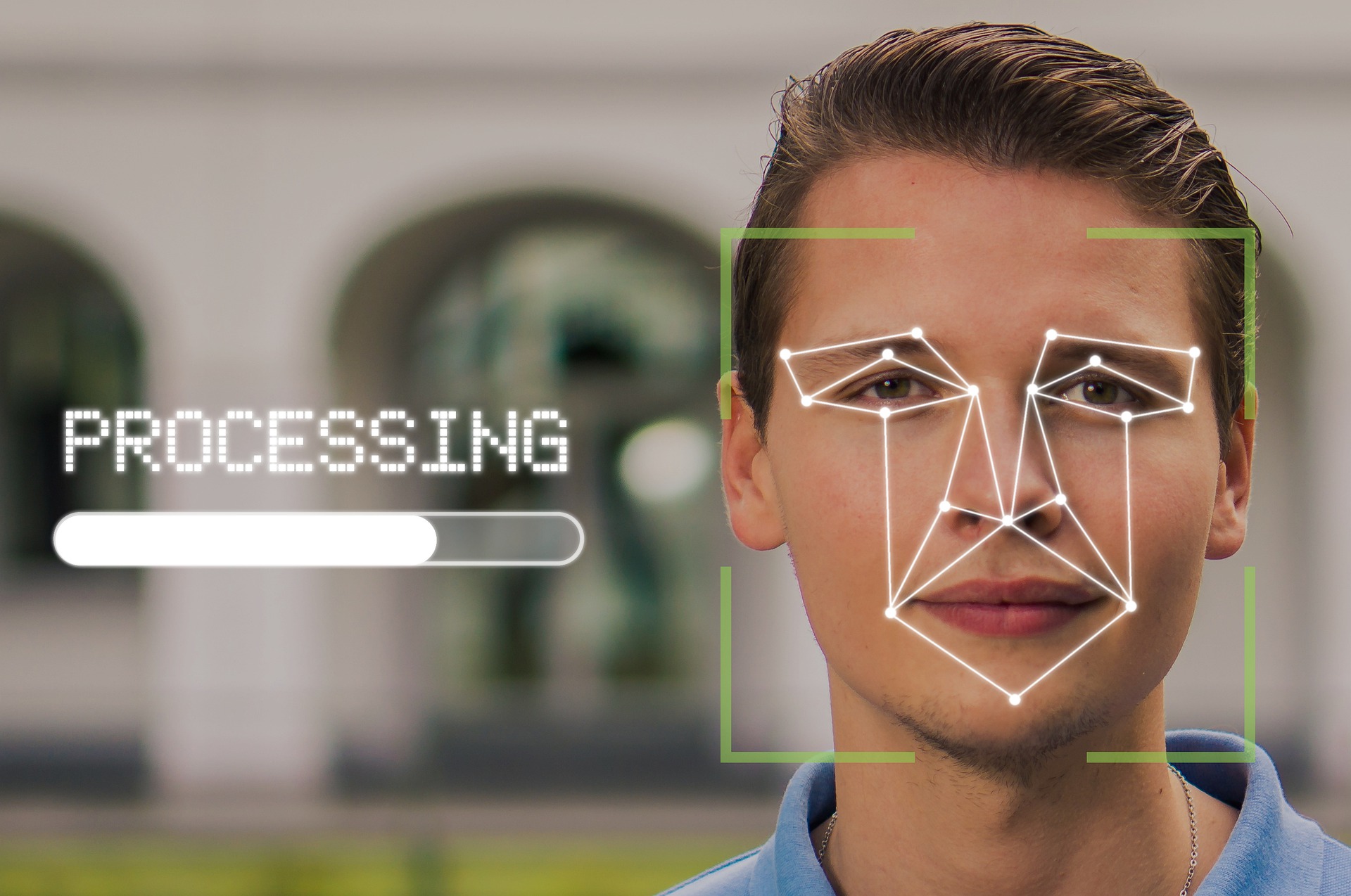

One of the most striking discoveries in the report is the emergence of 100% deepfake synthetic selfies: hyper-realistic images created to mimic authentic facial features with the aim of bypassing verification systems.

Traditionally, selfies have been considered a reliable method for biometric authentication, because the technology needed to convincingly fake a facial image was beyond the reach of most fraudsters.

AU10TIX highlights that these synthetic selfies pose a unique challenge to traditional KYC (Know Your Customer) procedures. The shift suggests that, going forward, organizations relying solely on facial matching technology may need to re-evaluate and strengthen their detection methods.

Furthermore, fraudsters are increasingly using AI to generate variations of synthetic identities through “image template” attacks. These involve manipulating a single ID template to create multiple unique identities, complete with randomized photo elements, document numbers, and other personal identifiers. By leveraging AI to scale synthetic identity creation in this way, attackers can quickly open fraudulent accounts across platforms.

In the payments sector, the fraud rate declined in Q3, falling from 52% in Q2 to 39%. AU10TIX credits this progress to increased regulatory oversight and law enforcement interventions. However, despite the reduction in direct attacks, payments remains the most frequently targeted sector. Many fraudsters, deterred by heightened security, are redirecting their efforts toward the crypto market, which accounted for 31% of all attacks in Q3.

AU10TIX recommends that organizations move beyond traditional document-based verification methods. One key recommendation is adopting behavior-based detection systems that go deeper than standard identity checks. By analyzing patterns in user behavior, such as login routines, traffic sources, and other distinctive behavioral cues, companies can identify anomalies that indicate potentially fraudulent activity.
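The report does not describe how such behavior-based detection is implemented. As a purely hypothetical illustration, the sketch below builds a per-user baseline from two of the cues mentioned above, login hour and traffic source, and flags a new login that falls outside that baseline. The `LoginEvent` schema, the `BehaviorBaseline` class, and the `window`/`min_share` thresholds are all invented for this example and are not AU10TIX's method; production systems typically combine many more signals with statistical or machine-learning models.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class LoginEvent:
    """One login observation (hypothetical schema, for illustration only)."""
    user_id: str
    hour: int            # local hour of day, 0-23
    traffic_source: str  # e.g. "direct", "email-link", "proxy"


def hour_distance(a: int, b: int) -> int:
    """Distance between two hours on a 24-hour clock (23 and 1 are 2 apart)."""
    d = abs(a - b) % 24
    return min(d, 24 - d)


class BehaviorBaseline:
    """Per-user profile of past login hours and traffic sources.

    Hypothetical sketch: the thresholds below are arbitrary assumptions.
    """

    def __init__(self, history: list[LoginEvent]) -> None:
        self.hours: dict[str, list[int]] = {}
        self.sources: dict[str, Counter] = {}
        for event in history:
            self.hours.setdefault(event.user_id, []).append(event.hour)
            self.sources.setdefault(event.user_id, Counter())[event.traffic_source] += 1

    def anomaly_reasons(self, event: LoginEvent, window: int = 2,
                        min_share: float = 0.1) -> list[str]:
        """Return reasons this login looks unusual; an empty list means normal."""
        past_hours = self.hours.get(event.user_id)
        if not past_hours:
            return ["no behavioral history for this user"]
        reasons = []
        # Share of past logins that occurred within `window` hours of this one.
        near = sum(1 for h in past_hours if hour_distance(h, event.hour) <= window)
        if near / len(past_hours) < min_share:
            reasons.append(f"unusual login hour {event.hour}")
        # A traffic source this user has never been seen arriving from.
        # (Indexing a Counter with a missing key returns 0 without inserting it.)
        if self.sources[event.user_id][event.traffic_source] == 0:
            reasons.append(f"new traffic source '{event.traffic_source}'")
        return reasons


if __name__ == "__main__":
    history = [LoginEvent("alice", h, "direct") for h in (8, 9, 9, 10, 8, 9)]
    baseline = BehaviorBaseline(history)
    print(baseline.anomaly_reasons(LoginEvent("alice", 9, "direct")))  # []
    # Flags both the 3 a.m. login hour and the never-seen "proxy" source.
    print(baseline.anomaly_reasons(LoginEvent("alice", 3, "proxy")))
```

Returning human-readable reasons rather than a single score keeps each flag explainable to a reviewer, which is one plausible design choice; a real deployment would more likely weight and combine such signals into a risk score.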

“Fraudsters are evolving faster than ever, leveraging AI to scale and execute their attacks, especially in the social media and payments sectors,” said Dan Yerushalmi, CEO of AU10TIX.

“While companies are using AI to bolster security, criminals are weaponizing the same technology to create synthetic selfies and fake documents, making detection almost impossible.”
