Tech Republic

NVIDIA Unveils AI & Supercomputing Advances at SC 2024

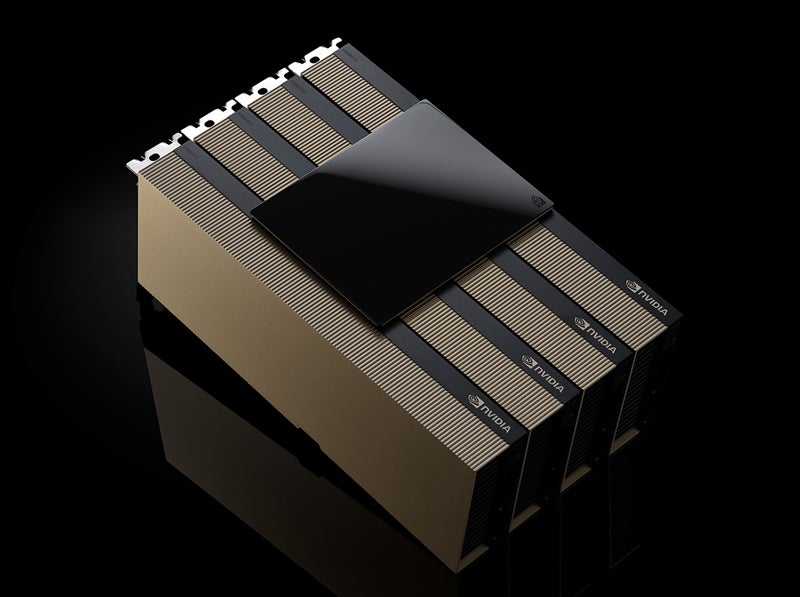

NVIDIA revealed numerous infrastructure, hardware, and resources for scientific research and enterprise at the International Conference for High Performance Computing, Networking, Storage, and Analysis, held Nov. 17 to Nov. 22 in Atlanta. Key among these announcements was the upcoming general availability of the H200 NVL AI accelerator.

The latest Hopper chip is coming in December

NVIDIA announced at a media briefing on Nov. 14 that platforms built with the H200 NVL PCIe GPU will be available in December 2024. Enterprise customers can refer to an Enterprise Reference Architecture for the H200 NVL. Purchasing the new GPU at enterprise scale will come with a five-year subscription to the NVIDIA AI Enterprise service.

Dion Harris, NVIDIA’s director of accelerated computing, said at the briefing that the H200 NVL is ideal for data centers with lower-power (under 20kW), air-cooled accelerator rack designs.

“Companies can fine-tune LLMs within a few hours” with the upcoming GPU, Harris said.

H200 NVL shows a 1.5x memory increase and a 1.2x bandwidth increase over NVIDIA H100 NVL, the company said.
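As a rough check on those multipliers, the short sketch below divides per-GPU memory and bandwidth figures for the two cards. The numbers in `SPECS` are not from the briefing; they are assumed from publicly listed datasheet values and should be verified against NVIDIA's current specifications.

```python
# Assumed datasheet figures (not stated in the article): per-GPU memory in GB
# and memory bandwidth in TB/s. Verify against NVIDIA's published specs.
SPECS = {
    "H100 NVL": {"memory_gb": 94, "bandwidth_tbs": 3.9},
    "H200 NVL": {"memory_gb": 141, "bandwidth_tbs": 4.8},
}


def uplift(new, old, key):
    """Return how many times larger `key` is on `new` than on `old`."""
    return SPECS[new][key] / SPECS[old][key]


memory_ratio = uplift("H200 NVL", "H100 NVL", "memory_gb")
bandwidth_ratio = uplift("H200 NVL", "H100 NVL", "bandwidth_tbs")

print(f"memory: {memory_ratio:.1f}x, bandwidth: {bandwidth_ratio:.1f}x")
```

Under these assumed figures, both ratios round to the values NVIDIA quoted.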

Dell Technologies, Hewlett Packard Enterprise, Lenovo, and Supermicro will support the new PCIe GPU. It will also appear in platforms from Aivres, ASRock Rack, GIGABYTE, Inventec, MSI, Pegatron, QCT, Wistron, and Wiwynn.


Grace Blackwell chip rollout continuing

Harris also emphasized that partners and vendors have the NVIDIA GB200 NVL4 (Grace Blackwell) chip in hand.

“The rollout of Blackwell is proceeding smoothly,” he said.

Blackwell chips are sold out through the next year.

Unveiling the Next Phase of Real-Time Omniverse Simulations

In manufacturing, NVIDIA launched the Omniverse Blueprint for Real-Time CAE Digital Twins, now in early access. This new reference pipeline shows how researchers or organizations can accelerate simulations and real-time visualizations, including real-time virtual wind tunnel testing.

Built on NVIDIA NIM AI microservices, Omniverse Blueprint for Real-Time CAE Digital Twins lets simulations that typically take weeks or months be performed in real time. This capability will be on display at SC’24, where Luminary Cloud will show how it can be leveraged in a fluid dynamics simulation.

“We built Omniverse so that everything can have a digital twin,” Jensen Huang, founder and CEO of NVIDIA, said in a press release.

“By integrating NVIDIA Omniverse Blueprint with Ansys software, we’re enabling our customers to tackle increasingly complex and detailed simulations more quickly and accurately,” said Ajei Gopal, president and CEO of Ansys, in the same press release.

CUDA-X library updates accelerate scientific research

NVIDIA’s CUDA-X libraries help accelerate these real-time simulations. The libraries are also receiving updates targeting scientific research, including changes to CUDA-Q and the release of a new version of cuPyNumeric.

Dynamics simulation functionality will be included in CUDA-Q, NVIDIA’s development platform for building quantum computers. The goal is to perform quantum simulations in practical times, such as an hour instead of a year. Google works with NVIDIA to build representations of its qubits using CUDA-Q, “bringing them closer to the goal of achieving practical, large-scale quantum computing,” Harris said.

NVIDIA also announced the latest version of cuPyNumeric, its accelerated computing library for scientific research. Designed for scientific settings that often use NumPy programs and run on a CPU-only node, cuPyNumeric lets these projects scale to thousands of GPUs with minimal code changes. It is currently being used in select research institutions.
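To illustrate what "minimal code changes" can look like, here is a small sketch of a NumPy-style program in which only the import line differs. It assumes cuPyNumeric is installed under the module name `cupynumeric` and falls back to plain NumPy otherwise; the `jacobi_step` and `relax` functions are hypothetical examples written for this illustration, not code from NVIDIA.

```python
# Use cuPyNumeric as a drop-in replacement when it is installed;
# otherwise fall back to NumPy so the same script still runs on a CPU.
try:
    import cupynumeric as np  # assumption: cuPyNumeric is installed
except ImportError:
    import numpy as np


def jacobi_step(grid):
    """Apply one Jacobi relaxation sweep to the interior of a 2D grid."""
    new = grid.copy()
    # Each interior cell becomes the average of its four neighbours.
    new[1:-1, 1:-1] = 0.25 * (
        grid[:-2, 1:-1] + grid[2:, 1:-1] + grid[1:-1, :-2] + grid[1:-1, 2:]
    )
    return new


def relax(n=64, steps=100):
    """Relax an n x n grid whose top edge is held at 1.0."""
    grid = np.zeros((n, n))
    grid[0, :] = 1.0  # fixed boundary condition on the top edge
    for _ in range(steps):
        grid = jacobi_step(grid)
    return grid


result = relax()
print(float(result.mean()))
```

The array code itself is ordinary NumPy; whether and how well it scales across GPUs depends on the cuPyNumeric installation and how the script is launched.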
